Java Out of Memory Error When Crawling an SES Index

When you schedule a search definition, it might appear to run forever, especially for a large index such as HC_HR_JOB_DATA, HC_BEN_HEALTH_BENEFIT, or HC_HPY_CREATE_ADDL_PAY. If you open the crawler log (1ds*.MMDDHHMM.log in <SES>/oradata/ses/log), you might find an “out of memory” error like the one below:

Crawl Fails and Logs Error “java.lang.OutOfMemoryError: Java heap space”

You may also see something like this:

ERROR Thread-2 EQP-60329: Exiting FeedFetcher due to error: java.lang.OutOfMemoryError: Java heap space

This error occurs because larger indexes require the crawler to use more memory, so the solution is to increase the memory available to the crawler. On SES 11.2.2.2, apply the SES mandatory fixes.

If you’re using 11.1.2.2, use the following steps:

1. Log in to the SES database as sysdba.

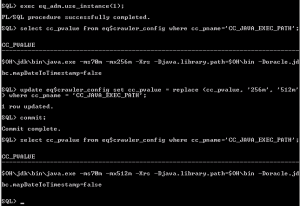

2. Execute the procedure: exec eq_adm.use_instance(1)

3. Run the command:

select cc_pvalue from eq$crawler_config where cc_pname = 'CC_JAVA_EXEC_PATH';

You may see the max heap size set to 256 MB ('-mx256m').

4. Increase the heap size by running the command:

update eq$crawler_config set cc_pvalue = replace(cc_pvalue, '256m', '512m') where cc_pname = 'CC_JAVA_EXEC_PATH';

5. Commit the change.

6. Verify that the max heap size was updated by rerunning the query from step 3.

7. Restart SES
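
Steps 2 through 6 can be run together in one SQL*Plus session. The script below is a minimal sketch that uses only the statements from the steps above. It assumes you are already connected to the SES database as sysdba and that the current value contains 256m. If your heap size is set to something else, adjust the search string to match.

```sql
-- Step 2: select the SES instance.
exec eq_adm.use_instance(1)

-- Step 3: show the current Java command line used by the crawler.
select cc_pvalue from eq$crawler_config where cc_pname = 'CC_JAVA_EXEC_PATH';

-- Step 4: raise the max heap size from 256 MB to 512 MB.
update eq$crawler_config
   set cc_pvalue = replace(cc_pvalue, '256m', '512m')
 where cc_pname = 'CC_JAVA_EXEC_PATH';

-- Step 5: make the change permanent.
commit;

-- Step 6: confirm that the value now contains 512m.
select cc_pvalue from eq$crawler_config where cc_pname = 'CC_JAVA_EXEC_PATH';
```

Note that replace changes every occurrence of 256m in the value. If the string could contain 256m anywhere other than the heap flag, matching the whole flag ('-mx256m' to '-mx512m') is a more conservative choice.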
